Overview

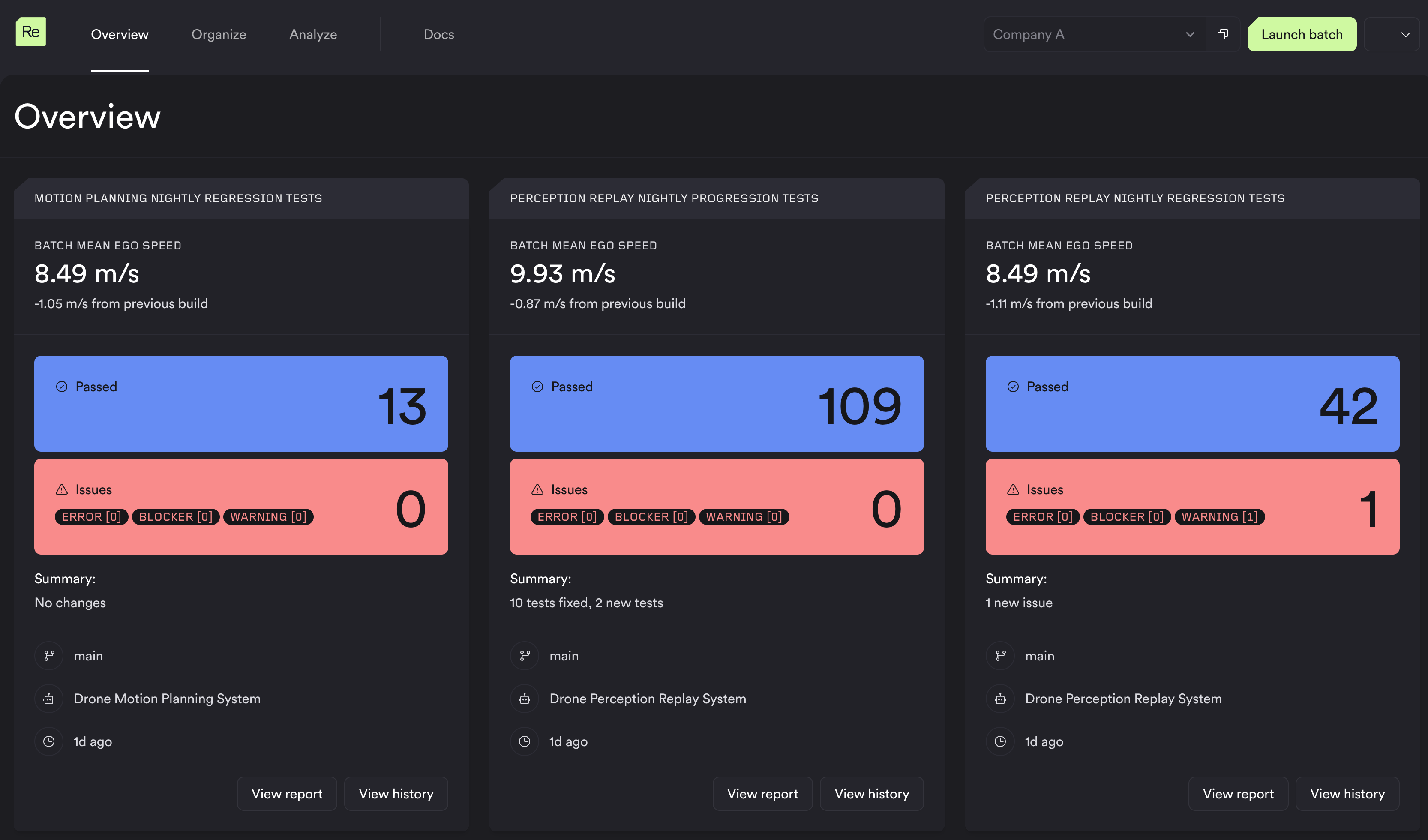

Now that we have our test suites defined and reports running against them, we can set up the Overview page.

The Overview page shows the test suites of your choosing, and how your tests and metrics compare between the latest two builds.

What is the Overview page?

This page compares test suite results between the latest two builds on your main branch, for each test suite and system you select. It also shows details that help you measure progress and get an overall view of the health of your systems:

- How many tests have been fixed in the latest build

- How many tests have started failing in the latest build

- How many new tests have been added to the test suite

- How key metric(s) have changed since the previous build
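The build-to-build comparison described in this list can be sketched in Python. This is a minimal illustration, not ReSim's implementation: it assumes each build's results are available as a hypothetical dictionary mapping test name to a pass/fail boolean, and classifies tests the way the list describes.

```python
from dataclasses import dataclass


@dataclass
class BuildComparison:
    fixed: list[str]          # failed in the previous build, pass in the latest
    newly_failing: list[str]  # passed in the previous build, fail in the latest
    new_tests: list[str]      # present only in the latest build


def compare_builds(previous: dict[str, bool], latest: dict[str, bool]) -> BuildComparison:
    """Compare two builds' results; values are True for pass, False for fail.

    The dict-of-booleans input is a hypothetical stand-in for real test results.
    """
    fixed = sorted(t for t, ok in latest.items() if t in previous and ok and not previous[t])
    newly_failing = sorted(t for t, ok in latest.items() if t in previous and not ok and previous[t])
    new_tests = sorted(t for t in latest if t not in previous)
    return BuildComparison(fixed, newly_failing, new_tests)


# Hypothetical results for two builds
previous = {"cut_in": False, "lane_keep": True, "merge": True}
latest = {"cut_in": True, "lane_keep": False, "merge": True, "roundabout": True}
result = compare_builds(previous, latest)
print(result.fixed, result.newly_failing, result.new_tests)
# → ['cut_in'] ['lane_keep'] ['roundabout']
```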

Setting up the Overview page

For a test suite to show up on this page, you need to do two things:

- Have a report running against the test suite (completed in the previous step)

- Explicitly enable the test suite to show up on this page using the CLI:

```shell
./resim test-suites revise --test-suite nightly --show-on-summary true
```

That's it!
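If you have several test suites to enable, a small script can repeat the same CLI call for each one. This is a hedged sketch, assuming the `./resim` binary sits in the current directory as in the command above; it uses only the `revise` flags shown there, and the helper names and the `regression` suite name are hypothetical.

```python
import subprocess


def build_enable_command(suite: str) -> list[str]:
    """Build the CLI call that enables one test suite on the Overview page."""
    return ["./resim", "test-suites", "revise", "--test-suite", suite, "--show-on-summary", "true"]


def enable_on_overview(suites: list[str], dry_run: bool = True) -> list[list[str]]:
    """Enable each suite; with dry_run=True, only return the commands without running them."""
    commands = [build_enable_command(s) for s in suites]
    if not dry_run:
        for cmd in commands:
            # check=True raises CalledProcessError if the CLI exits with a non-zero status
            subprocess.run(cmd, check=True)
    return commands


# "regression" is a hypothetical suite name used for illustration
for cmd in enable_on_overview(["nightly", "regression"]):
    print(" ".join(cmd))
```

Defaulting to a dry run lets you review the exact commands before anything is changed.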

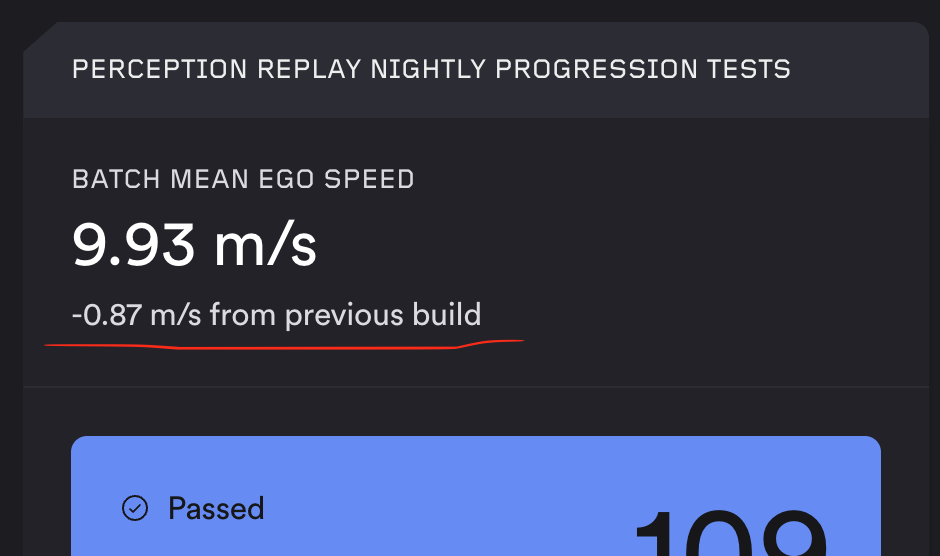

(optional) Selecting a scalar metric

For each test suite, you can choose one key scalar metric to display. In this example, we have chosen to display the "batch mean ego speed" metric, which is shown along with its change from the previous build.

For the scalar metric to show up here, you need to add the special tag `RESIM_SUMMARY` when building your batch metrics:

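```python
# `metrics_writer` is the metrics writer used in your batch metrics build.
# The surrounding parentheses let the chained calls span multiple lines.
(
    metrics_writer
    .add_scalar_metric("batch mean ego speed")
    .with_value(9.93)
    .with_unit("m/s")
    # Special tag that marks this metric for the Overview page
    .with_tag(key="RESIM_SUMMARY", value="1")
)
```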
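The value passed to `with_value` is a batch-level aggregate. As a hedged sketch, assuming your jobs each produce a hypothetical per-job mean ego speed in m/s, the batch mean could be computed like this before it is handed to the metrics writer:

```python
from statistics import fmean


def batch_mean_ego_speed(per_job_mean_speeds: list[float]) -> float:
    """Average per-job mean speeds (m/s) into a single batch-level value.

    Hypothetical helper: every job is weighted equally, regardless of its duration.
    """
    if not per_job_mean_speeds:
        raise ValueError("no job results in this batch")
    return fmean(per_job_mean_speeds)


# Hypothetical per-job values (m/s) for one batch
value = batch_mean_ego_speed([9.5, 10.0, 10.5])
print(value)  # → 10.0
```

Equal weighting is a design choice; if your jobs differ a lot in length, a duration-weighted mean may be more representative.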

Notes:

- This metric must be calculated in your batch metrics, not your job metrics.

- Only `SCALAR` metrics are allowed; other metric types with this tag will be ignored.

- The value on the tag is currently unused.
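The rules in these notes can be modeled as a simple filter. This is a hypothetical sketch, not ReSim's code: the `Metric` type and its fields are invented for illustration. It keeps only `SCALAR` metrics that carry a `RESIM_SUMMARY` tag and ignores the tag's value. How the page handles more than one tagged metric per test suite is not described here, so the sketch simply returns every match.

```python
from dataclasses import dataclass, field


@dataclass
class Metric:
    # Hypothetical stand-in for a batch metric; not a ReSim SDK type
    name: str
    metric_type: str
    tags: dict[str, str] = field(default_factory=dict)


def summary_metrics(metrics: list[Metric]) -> list[Metric]:
    """Return SCALAR metrics tagged RESIM_SUMMARY; the tag's value is not checked."""
    return [m for m in metrics if m.metric_type == "SCALAR" and "RESIM_SUMMARY" in m.tags]


metrics = [
    Metric("batch mean ego speed", "SCALAR", {"RESIM_SUMMARY": "1"}),
    Metric("speed histogram", "HISTOGRAM", {"RESIM_SUMMARY": "1"}),  # ignored: not SCALAR
    Metric("max decel", "SCALAR"),                                   # ignored: no tag
    Metric("min gap", "SCALAR", {"RESIM_SUMMARY": "0"}),             # kept: tag value is unused
]
print([m.name for m in summary_metrics(metrics)])
# → ['batch mean ego speed', 'min gap']
```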

The Overview page retrieves this metric from the batches run against the two most recent builds and displays the difference automatically.
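The difference itself is simple arithmetic. The text does not say whether the displayed diff is absolute or relative, so this minimal sketch computes both; the helper name and the values are hypothetical.

```python
from typing import Optional, Tuple


def metric_change(previous: float, latest: float) -> Tuple[float, Optional[float]]:
    """Return (absolute change, percent change) from the previous build to the latest.

    Percent change is None when the previous value is zero, since it is undefined.
    """
    delta = latest - previous
    percent = None if previous == 0 else 100.0 * delta / previous
    return delta, percent


# Hypothetical metric values for the previous and latest builds
delta, percent = metric_change(previous=10.0, latest=12.5)
print(delta, percent)  # → 2.5 25.0
```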