Managing Your Artifacts

This tutorial covers the basics of managing your tests and other data artifacts within ReSim. It gives a quick illustration of the relevant concepts in the ReSim app, Systems and Metrics, and shows how you might use them for your robot.

Problem

As you scale your offline testing, you generate many artifacts: experiences, versions of code to test, and metrics you want to collect. Many of these artifacts end up being irrelevant to, or incompatible with, one another, so managing them becomes a burden. During development, you want to see only the tests that matter to you and your system, and to ensure your metrics are managed correctly.

Before you run any tests, and risk wasting time and resources on them, you want to ensure that your tests, metrics, and system build are all compatible.

Examples

This section runs through some examples of the above concepts, applied to an example basic autonomy architecture.

Systems

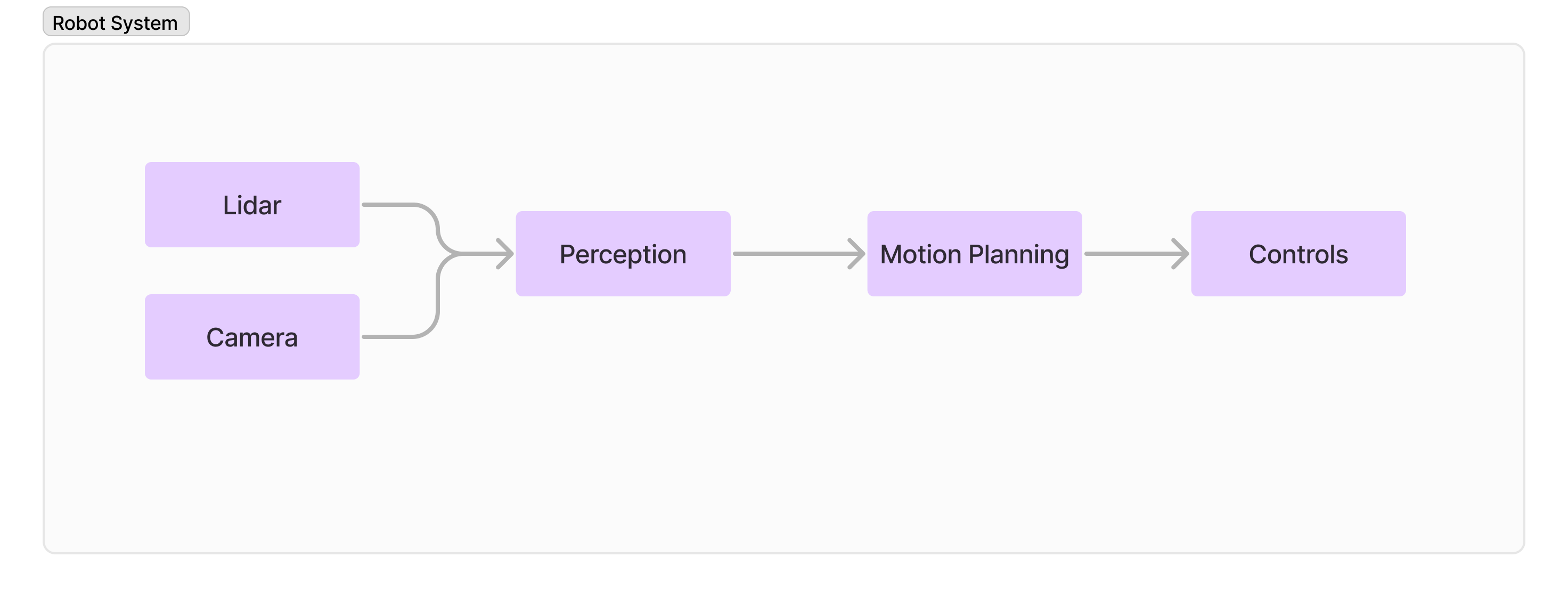

In this diagram, you see the sensors feed into a perception module, which feeds down into motion planning and ultimately controls. This is a basic representation of the pieces of an autonomy system, but sometimes these modules are combined or there are additional modules. The above example can be defined as its own System, which represents the end to end modules that make up the on-robot system. In the ReSim Platform, you could define a set of Systems as follows:

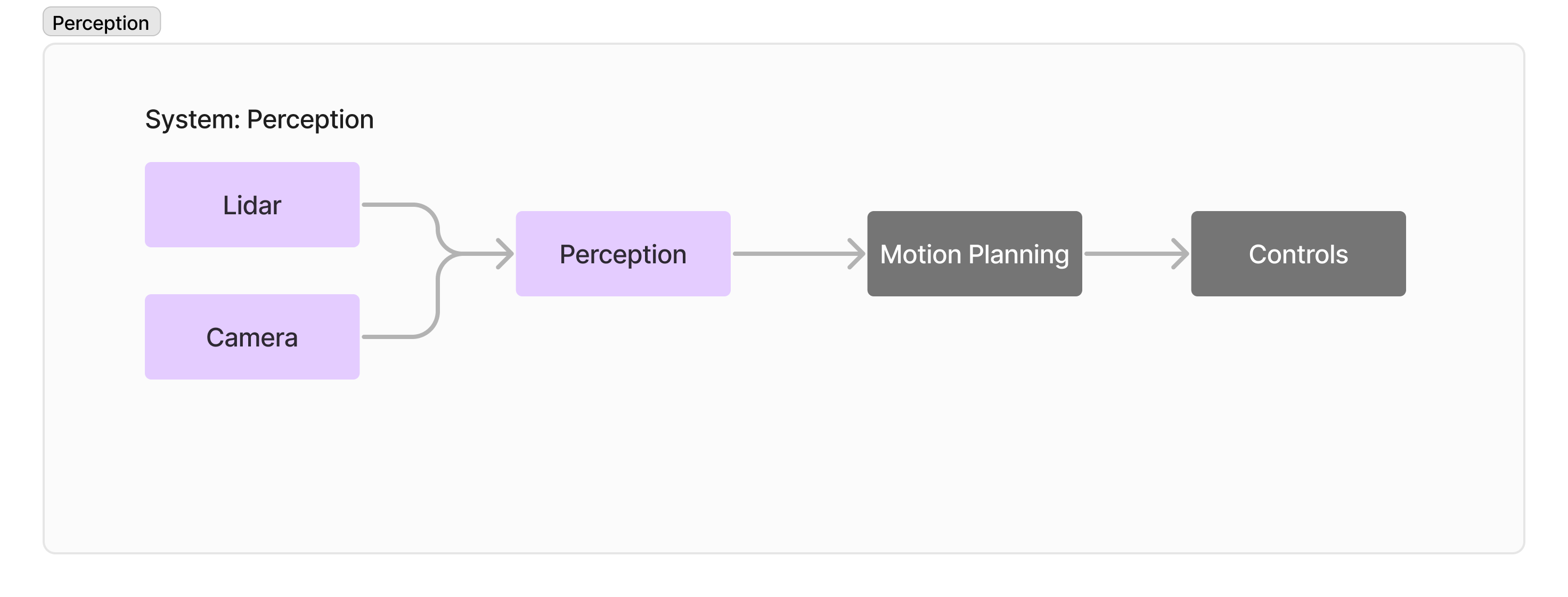

Perception: A perception system is likely concerned with detecting everything of interest and tracking those objects over time. A perception system is likely to require high fidelity images (either real or simulated), but the metrics you care about are likely to be independent of the motion of the robot. By defining a perception System, you can isolate the tests you run and metrics you generate to only those that apply to a perception system.

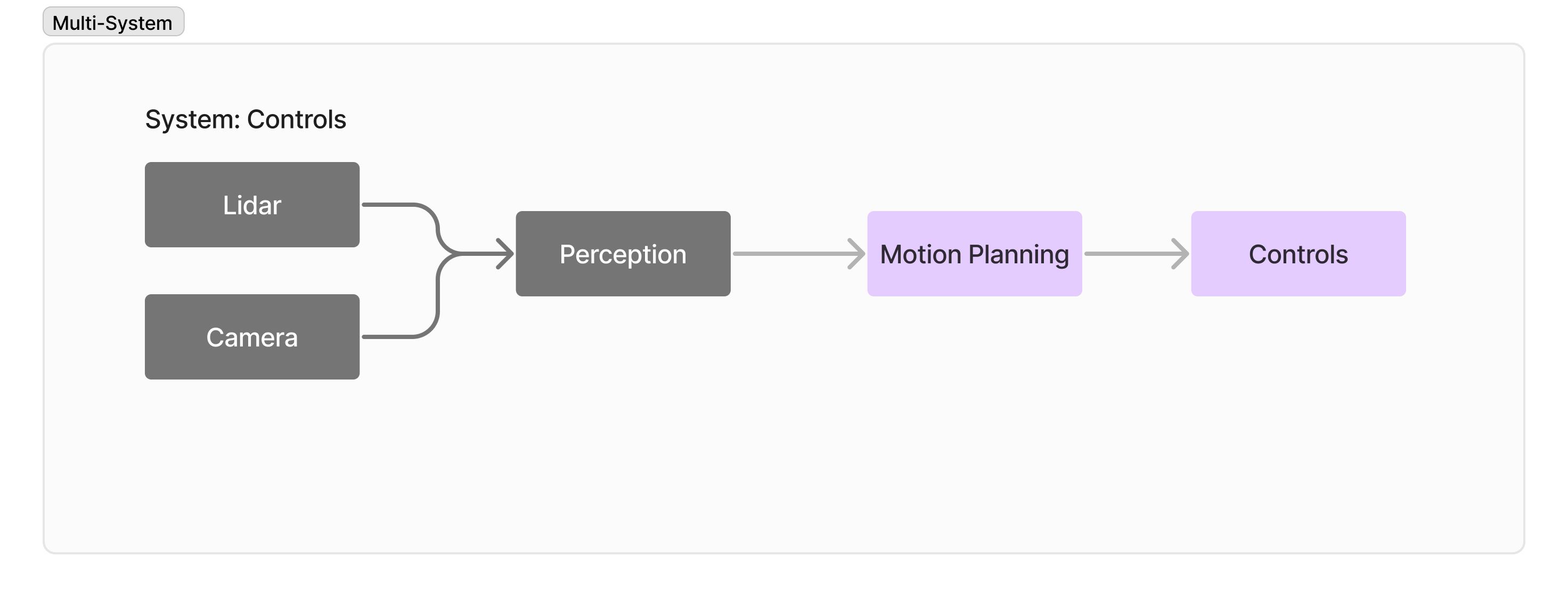

Multi-Module Systems: You can define a System in any way that is relevant to your robot. For example, the motion planner and controller in a robot system are closely tied together, but separating them for testing can still be useful. You can therefore define Systems for the individual modules, for the combination of the two, or for both. The combined System represents more of the realistic interaction of those modules on the robot.
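To make the idea of overlapping Systems concrete, here is a minimal sketch in Python. It is only an illustrative in-memory model and not the ReSim API: the `System` class, the system names other than Perception, and the `systems_covering` helper are all hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


# Hypothetical model for illustration only; this is NOT the ReSim API.
@dataclass(frozen=True)
class System:
    name: str
    modules: FrozenSet[str]  # the on-robot modules this System exercises


# Single-module Systems let you test each module in isolation.
PERCEPTION = System("Perception", frozenset({"perception"}))
MOTION_PLANNING = System("Motion Planning", frozenset({"motion_planning"}))
CONTROLS = System("Controls", frozenset({"controls"}))

# A multi-module System captures the interaction between planner and controller.
PLANNING_AND_CONTROLS = System(
    "Planning and Controls", frozenset({"motion_planning", "controls"})
)

# The end-to-end System covers every module in the example architecture.
END_TO_END = System(
    "End to End", frozenset({"perception", "motion_planning", "controls"})
)

ALL_SYSTEMS = [
    PERCEPTION,
    MOTION_PLANNING,
    CONTROLS,
    PLANNING_AND_CONTROLS,
    END_TO_END,
]


def systems_covering(module: str, systems: List[System]) -> List[str]:
    """Return the names of all Systems that include the given module."""
    return [s.name for s in systems if module in s.modules]


print(systems_covering("controls", ALL_SYSTEMS))
```

The same module can belong to several Systems, so a change to the controller in this sketch would be worth testing in isolation, together with the planner, and end to end.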

Metrics

In this example autonomy stack, we have defined two main systems: the Perception System and the Multi-Module (Motion Planning) System. These two systems would have very different metrics. For example, if you are not processing image data, it doesn't make sense to attempt to compute image processing time. To run metrics efficiently, you could define Metrics Builds for each of these Systems that capture the appropriate types of metrics, such as:

Perception:

- Classification accuracy

- Precision & recall

- Time to detect

Multi-Module Motion Planning:

- Robot state and its derivatives, such as the pose, velocity, and acceleration of the robot

- Collision Checking

- Planning metrics associated with the plans produced and safety constraints
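The compatibility check described in the Problem section can be sketched as follows. This is a minimal, hypothetical model rather than the ReSim API: the `Experience` and `MetricsBuild` classes, their fields, the example names, and the `check_compatibility` function are all assumptions made for illustration. The idea is simply that an experience and a metrics build should both be associated with the System under test before a test runs.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Tuple


# Hypothetical model for illustration only; this is NOT the ReSim API.
@dataclass(frozen=True)
class Experience:
    name: str
    systems: FrozenSet[str]  # names of Systems this experience can be run with


@dataclass(frozen=True)
class MetricsBuild:
    name: str
    system: str  # name of the System this metrics build was written for
    metrics: Tuple[str, ...]


def check_compatibility(
    system: str, experience: Experience, metrics_build: MetricsBuild
) -> List[str]:
    """Return a list of problems; an empty list means the combination is compatible."""
    problems = []
    if system not in experience.systems:
        problems.append(
            f"experience '{experience.name}' is not associated with system '{system}'"
        )
    if metrics_build.system != system:
        problems.append(
            f"metrics build '{metrics_build.name}' targets system "
            f"'{metrics_build.system}', not '{system}'"
        )
    return problems


# Example artifacts (names are made up for this sketch).
perception_metrics = MetricsBuild(
    "perception-metrics",
    "Perception",
    ("classification_accuracy", "precision_recall", "time_to_detect"),
)
planning_metrics = MetricsBuild(
    "planning-metrics",
    "Multi-Module Motion Planning",
    ("robot_state", "collision_checking", "planning_metrics"),
)
camera_replay = Experience("camera-replay", frozenset({"Perception"}))

# A planning metrics build paired with a perception test is flagged before anything runs.
for problem in check_compatibility("Perception", camera_replay, planning_metrics):
    print(problem)
```

Running a check like this before launching tests surfaces mismatches, such as a motion planning metrics build paired with a perception experience, without spending any compute on the run itself.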