Introduction

What is ReSim?

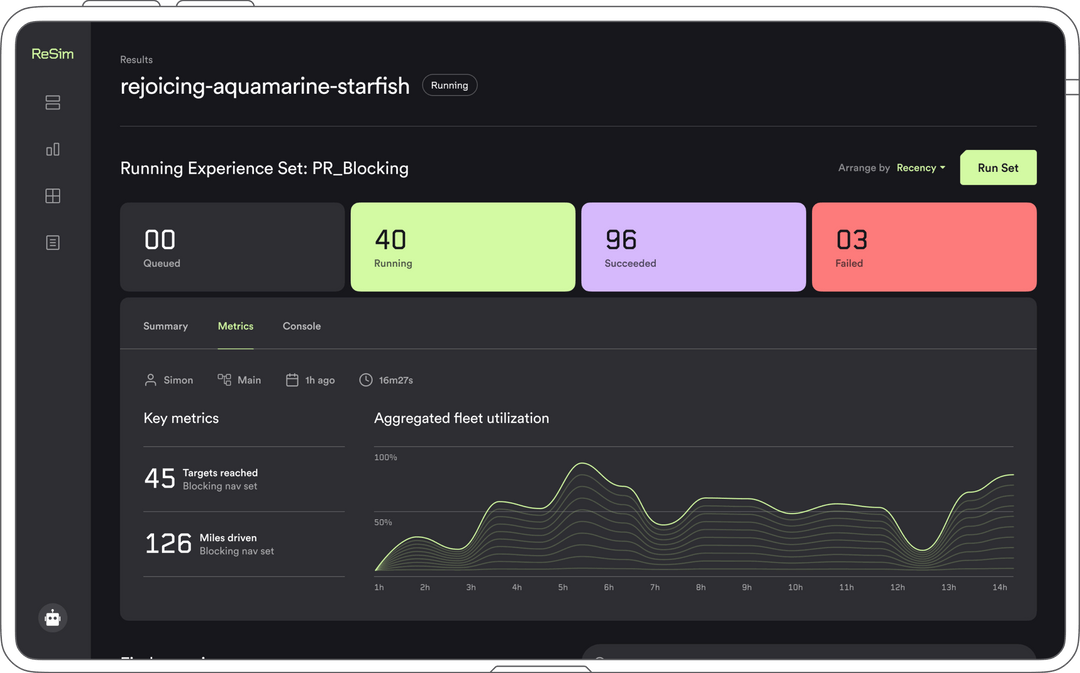

ReSim makes plug-and-play virtual testing infrastructure for modern robotics and AI companies. It works with any simulation or replay software, enables parallel cloud execution at scale, and unlocks pass/fail checks, A/B testing and rich metrics. ReSim makes it simple to know whether your robotics/AI stack is improving before you ship.

Why Use Simulation for CI?

Developing autonomous systems is hard. Because we work with complex hardware and software systems, it is very difficult to know the effects of any particular change to the robot's software. Really, we are working with systems of systems, where a change to one subsystem can have knock-on effects on the systems that depend on it. The easiest solution to this problem is to try out each proposed change on the physical robot to see whether it improves or degrades the robot's performance. Beyond measuring performance, this can also be a very high-fidelity way of verifying that the robot meets the requirements associated with its goals and environment. After all, we are testing each version of the software on the actual hardware. Unfortunately, there are a few reasons why real-world testing alone does not work well for many applications:

- Complex Environments: For many real-world robotics applications like self-driving or drone delivery, the robot must operate in an unstructured environment where unexpected agents and conditions may be encountered at any time. This imposes so many requirements on the robot that it becomes impossible to check them all on the real robot, either because:

  - Certain requirements can only be demonstrated in rare events, which cannot be reliably reproduced for testing purposes.

  - It takes too long to test the combinatorially large set of requirements sequentially on a single robot, and it's often too expensive to buy enough robots to test them in parallel.

- Safety: For certain applications (e.g. crewed spaceflight or self-driving), it can be quite dangerous to test every requirement in the real world. A failure in such cases could cause severe injury or death.

- Cost: Even where the environment is safe and relatively structured, checking requirements manually can be costly, requiring the time and effort of highly specialized personnel to conduct tests and interpret the results. In addition, such testing usually involves high latency (e.g. it takes 1+ business days to get metrics and feedback on a proposed change), which slows down autonomy development overall.

As a result of these issues, most robotics development efforts verify only a very small fraction of the complete set of robot requirements on each proposed change. This can yield a pernicious pattern where a change that fixes one issue in a robot's behavior degrades its behavior in another, potentially less visible, way. Furthermore, even when this isn't the case, engineers are discouraged from trying experimental improvements if they think these could degrade some unrelated behavior, which stifles innovation.

In our view, one of the most powerful applications of simulation is to address this issue through simulation testing in continuous integration (CI). This involves creating a set of "blocking" simulation scenarios in which a simulated model of the robot running its software (or even of individual subsystems) is expected to always succeed. Then, each proposed change (e.g. a Pull Request) to the robot software is tested against these scenarios, and if any fail, the change cannot land. This allows many different requirements (including those that would be difficult or unsafe to test in the real world) to be checked on every single change, giving engineers the confidence they need to try out more experiments and accelerate their development.
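The gating rule described above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not ReSim's API: `ExperienceResult`, `can_land`, `failing_experiences`, and the scenario names are invented here, and the sketch assumes each blocking scenario has already been run and reduced to a simple pass/fail outcome.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExperienceResult:
    """Hypothetical pass/fail outcome of one blocking simulation scenario."""
    name: str
    passed: bool


def can_land(results: list[ExperienceResult]) -> bool:
    """A change may land only if at least one blocking scenario ran and every one passed."""
    # An empty result list is treated as a failure, so a misconfigured run
    # that executes no scenarios cannot silently approve a change.
    return bool(results) and all(r.passed for r in results)


def failing_experiences(results: list[ExperienceResult]) -> list[str]:
    """Names of the scenarios that blocked the change, in input order."""
    return [r.name for r in results if not r.passed]


# Hypothetical scenario names, for illustration only.
results = [
    ExperienceResult("unprotected_left_turn", passed=True),
    ExperienceResult("pedestrian_crossing_at_night", passed=False),
]
print(can_land(results))             # False
print(failing_experiences(results))  # ['pedestrian_crossing_at_night']
```

Treating an empty result set as a failure is a deliberate choice here: a plain `all(...)` returns `True` for an empty list and would let every change through.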

In addition, broader sets of tests can be run at a regular cadence to assess the system's performance over time and build a holistic picture of its behavior. This gives engineers and managers the information they need to formulate and execute an effective development strategy.

In practice, running these tests quickly requires the parallelism afforded by cloud computing. The ReSim app lets users quickly and easily set up such a continuous integration workflow in a few steps:

- Making a set of scenarios (which we call "experiences") that we want to run on every change (or Pull Request) or on a regular cadence.

- Packaging our robot simulation code into a Docker container to be easily run on the cloud.

- Registering our scenarios and robot simulation code with the ReSim app so it can run them.

- Setting up a continuous integration action (e.g. a GitHub Action) to trigger the simulations automatically and block changes when they fail.
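To illustrate why parallelism matters and how a CI job ends up blocking a change, here is a hypothetical local sketch that fans a list of experiences out across worker threads and converts the outcomes into a process exit code, since CI systems such as GitHub Actions mark a step as failed when it exits non-zero. `run_suite`, `ci_exit_code`, `fake_run`, and the scenario names are invented for illustration and are not part of ReSim; with ReSim the simulations run in the cloud from your registered container, so `fake_run` merely stands in for launching one.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def run_suite(
    experiences: list[str],
    run_experience: Callable[[str], bool],
    max_workers: int = 8,
) -> dict[str, bool]:
    """Run every experience concurrently and map each name to pass/fail."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so zip pairs each name with its own outcome.
        outcomes = list(pool.map(run_experience, experiences))
    return dict(zip(experiences, outcomes))


def ci_exit_code(outcomes: dict[str, bool]) -> int:
    """Return 0 only if at least one experience ran and all passed; CI treats non-zero as failure."""
    return 0 if outcomes and all(outcomes.values()) else 1


def fake_run(name: str) -> bool:
    """Hypothetical stand-in for launching one packaged simulation; fails night-time scenarios."""
    return "night" not in name


results = run_suite(["highway_merge", "parking_lot", "pedestrian_at_night"], fake_run)
for name, passed in results.items():
    print(f"{name}: {'PASS' if passed else 'FAIL'}")
print("exit code:", ci_exit_code(results))  # exit code: 1
```

In a real CI step you would finish the script with `sys.exit(ci_exit_code(results))` so the failing `pedestrian_at_night` scenario fails the check. Threads suit this pattern because each worker would mostly wait on a remote simulation rather than compute locally.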

The subsequent articles cover how to accomplish each of these steps in detail. While such simulations do not obviate the need for rigorous real-world testing, they allow developers to minimize breakages and make improvements more quickly and confidently.

How do I start?

You will need to set up a few things before working with your own tests. Following this guide will get you set up.

We advise keeping our Core Concepts guide handy, as we use some specific definitions.

Once you're set up, we have a number of User Guides to walk you through the features.